Modern IT environments generate an unmanageable deluge of potential weaknesses. Large enterprises stare down a quarter of a million open vulnerabilities at any given time. Of those, they can typically remediate only about 10%.

This situation has created a dangerous state of vulnerability overload and alert fatigue, which leaves our best and brightest security professionals buried under a mountain of low-context alerts, unable to focus on the threats that pose a genuine, existential risk.

In a much-needed response to this challenge, the industry has rallied around a new and powerful strategic paradigm: CTEM (continuous threat exposure management). CTEM is a crucial and necessary development, representing a profound change in perspective; it moves away from a defender’s impossible task of securing everything and toward an attacker’s mindset of how to compromise critical operations.

The philosophy is sound. By aligning security with business context, CTEM empowers organizations to move from the inefficient posture of fixing everything to a strategic one of fixing what matters most. Gartner’s prediction that, by 2026, organizations prioritizing investments in a CTEM program will be three times less likely to suffer a breach provides a powerful justification for this new direction.

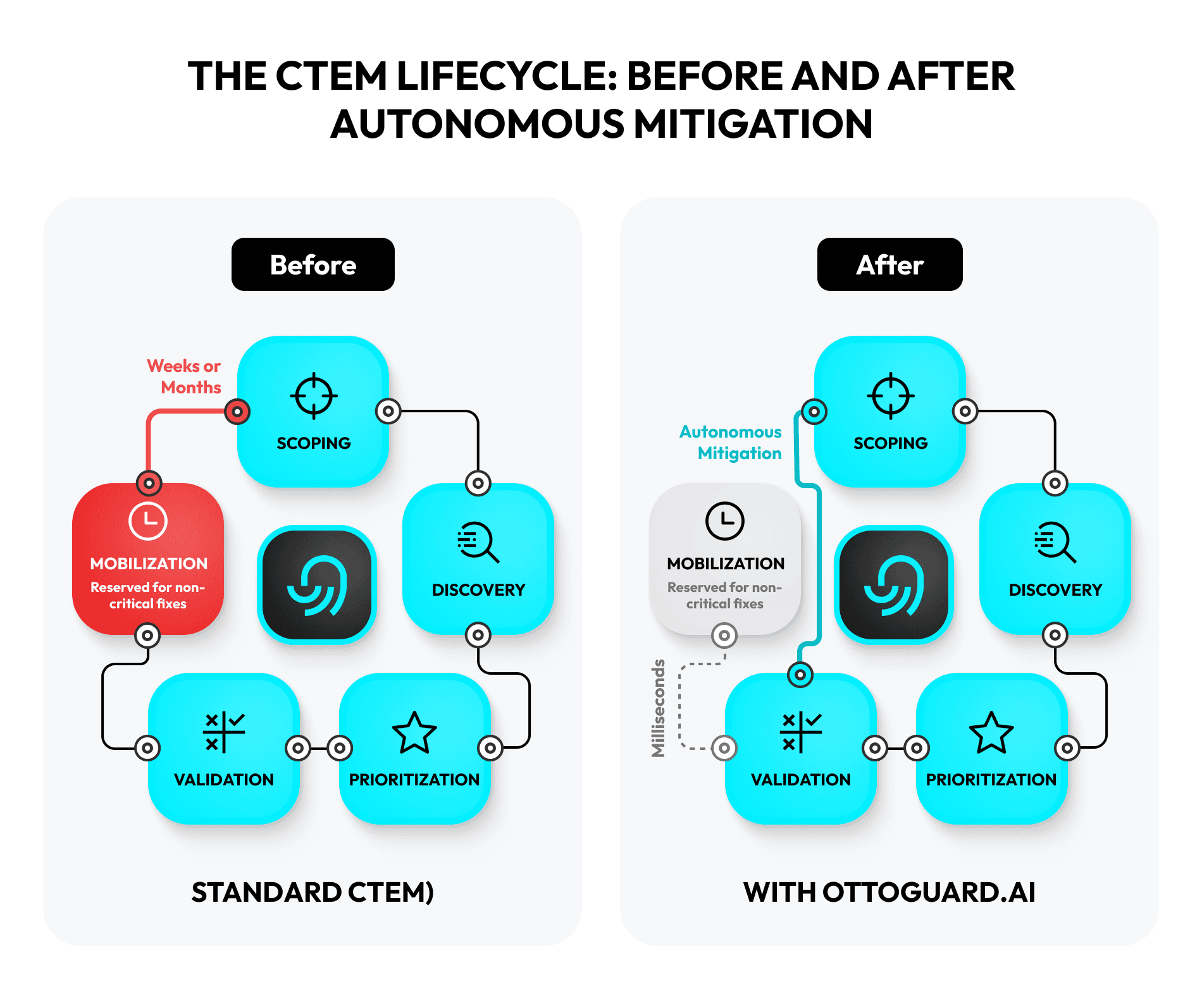

While CTEM provides a critical framework for sanity in a chaotic world, its traditional five-stage lifecycle — scoping, discovery, prioritization, validation, and mobilization — still contains a fundamental, friction-filled bottleneck: the final, arduous step of remediation. The goal cannot be to get better at managing a continuous cycle of discovery and response; it must be to break the cycle altogether.

This is where we must elevate the conversation. The industry debate is currently centered on exposure management vs. vulnerability management. But this is yesterday’s argument. The truly transformative discussion we need to have is exposure management vs. autonomous exposure mitigation.

It’s time to architect a future where we move beyond managing risk to eliminating it at its source — promptly, deterministically, and without the operational drag that has plagued us for a generation.

The Tyranny of the Final Mile: The Deep Pains of Mobilization

The CTEM framework is a significant leap forward; let there be no doubt. Its focus on business context, attack path analysis, and risk-based prioritization is indeed the correct one.

The first four stages of the lifecycle — scoping, discovery, prioritization, and validation — are about generating high-fidelity intelligence and helping you to map your sprawling, dynamic attack surface. Doing so allows you to pinpoint the choke points, the vital few exposures that, if remediated, can ensure the neutralization of multiple potential attack vectors. In short, this process promises to filter the signal from the deafening noise.

But all this brilliant, hard-won intelligence is worthless until the final stage: Mobilization. This is where the plan meets reality, where security requests leave the CISO’s dashboard and land in the overflowing queues of other departments. And all too often, this is where progress grinds to a halt.

Mobilization is the embodiment of organizational friction. In theory, it is a stage of cross-functional collaboration; for many organizations, it is a stage of cross-functional conflict. It requires orchestrating action across IT operations, cloud engineering, application security, DevOps, and line-of-business owners, all of whom have their own priorities, workflows, and performance metrics. Success hinges on breaking down organizational silos, and that remains the most formidable barrier to true security agility.

Think of what mobilization actually entails in a large enterprise. A critical vulnerability is validated on a mission-critical, internet-facing payment system. What happens next?

- A high-priority ticket is created in an ITSM system like ServiceNow.

- It’s assigned to an IT operations team already struggling with its own backlog of performance and stability projects.

- The proposed patch must be tested in a pre-production environment, which may not perfectly mirror the production setup. This testing takes time and resources away from other tasks.

- A maintenance window must be negotiated with the business unit, which is loath to take a revenue-generating system offline.

- The patch is deployed, often with a prayer that it doesn’t introduce new instability or break a critical dependency.

This entire process can take weeks or even months. Throughout that time, the window of exposure remains wide open, a silent alarm that security leaders can, paradoxically, hear remarkably clearly.

This is the tyranny of the final mile. It’s a process laden with manual effort, political capital, and unacceptable delays. Even the most advanced exposure management platform can only streamline the ticketing and tracking; it cannot eliminate the fundamental, time-consuming work of patching or reconfiguring a live system.

We have, as an industry, accepted this friction as a cost of doing business. But what if we could refuse to pay it?

From Continuous Motion to Autonomous Action: Reimagining Remediation

My philosophy is built on the belief that we can, and must, eliminate this friction. A singular, obsessive focus shapes this perspective: Making the exploitability of a vulnerability irrelevant.

This statement is the very essence of an exposure management strategy architected for the speed of modern threats. It doesn’t pivot around finding and prioritizing exposures but around neutralizing them as promptly as possible, at the point of attack, without ever touching the code or scheduling a moment of downtime. That isn’t a fantasy; it’s the reality of autonomous workload protection and the promise of the OTTOGUARD.AI platform.

OTTOGUARD.AI doesn’t merely participate in the CTEM lifecycle. Instead, it provides a powerful shortcut through its most challenging and time-consuming stage. By focusing on deterministic, application-aware protection at runtime, we can move beyond vulnerability mitigation to a state of proactive, holistic autonomous exposure mitigation.

Let’s re-examine the five stages of the CTEM lifecycle through this transformative lens.

Stage 1: Scoping

Scoping is the strategic foundation of a CTEM program, defining what is most critical to the organization. This business-centric exercise remains essential.

However, the presence of an autonomous mitigation capability changes the risk calculus. When you know you can render entire classes of vulnerabilities unexploitable on your most high-value assets, you can approach scoping with greater confidence. You can make more nuanced decisions about risk acceptance, knowing you have a powerful compensating control that acts as a definitive failsafe.

Stage 2: Discovery

Comprehensive discovery is non-negotiable. You must have a living database of every asset, identity, and software component within your environment, from the cloud to on-premises servers.

Tools like EASM (external attack surface management) and CAASM (cyber asset attack surface management) are critical for providing this visibility. OTTOGUARD.AI’s approach complements and consumes this intelligence, providing deep visibility into the software supply chain at the workload level to understand every library, process, and file in use. The result is a richer, more granular discovery process that maps not just assets, but the very DNA of the applications running on them.

Stage 3: Prioritization

This is where CTEM delivers immense strategic value. It moves beyond CVSS scores to a risk-based model that incorporates threat intelligence and business criticality.

Autonomous mitigation supercharges this stage. The prioritization calculus is fundamentally altered. Imagine a vulnerability with a CVSS score of 9.8 is discovered on a critical system. The traditional response is a fire drill. With autonomous runtime protection, however, you can verify that the technique required to exploit this flaw is blocked by default. The vulnerability, while technically present, is no longer an exposure. Its risk score plummets.

You can now de-prioritize the patch, treating it as a routine maintenance item instead of an emergency, freeing up immense resources to focus on genuine, unmitigated risks. You can finally stop chasing numbers and start reducing actual, measurable risk.
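The effect of a verified runtime control on prioritization can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the `Exposure` fields, the weighting of business criticality and threat intelligence, and the `mitigated_factor` discount are hypothetical and do not describe how OTTOGUARD.AI or any particular exposure management platform computes risk.

```python
from dataclasses import dataclass


@dataclass
class Exposure:
    vuln_id: str               # placeholder identifier, not a real CVE
    cvss: float                # CVSS base score, 0.0 to 10.0
    asset_criticality: float   # assumed business weighting, 0.0 to 1.0
    actively_exploited: bool   # assumed threat-intelligence flag
    runtime_mitigated: bool    # True only if a validated runtime control blocks the technique


def effective_risk(e: Exposure, mitigated_factor: float = 0.1) -> float:
    """Illustrative score: CVSS weighted by business context and threat intel,
    then discounted when a verified compensating control is in place."""
    score = e.cvss * e.asset_criticality
    if e.actively_exploited:
        score *= 1.5
    if e.runtime_mitigated:
        score *= mitigated_factor
    return round(score, 2)


def prioritize(exposures):
    """Return exposures ordered from highest to lowest effective risk."""
    return sorted(exposures, key=effective_risk, reverse=True)


critical_but_blocked = Exposure("VULN-EXAMPLE-1", 9.8, 1.0, True, True)
moderate_unmitigated = Exposure("VULN-EXAMPLE-2", 6.5, 0.8, False, False)
queue = prioritize([critical_but_blocked, moderate_unmitigated])
```

Under these assumed weights, the 9.8 finding on the protected system drops below the unmitigated 6.5 finding, which is the reordering described above. The discount should only be applied once the control has actually been validated against the relevant technique.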

Stage 4: Validation

The validation stage, which uses tools like BAS (breach and attack simulation) to test whether adversaries can leverage exposures, serves as a crucial reality check.

With autonomous mitigation, the goal of validation shifts profoundly. Instead of just confirming that a risk exists, you can run attack simulations to prove that your autonomous controls are working. You can demonstrate to auditors, executives, and yourself that even with a known, unpatched vulnerability, the attack path is severed. You are no longer validating the risk; you are validating your resilience.

Stage 5: Mobilization

This stage is where the perspective changes most dramatically. The slow, painful, cross-functional process of patching and remediation is short-circuited. Instead of mobilizing teams to schedule downtime and deploy a patch, you apply a protective control that neutralizes the threat class entirely.

The corollary is that you no longer measure the MTTR (mean time to remediate) for a critical, validated exposure in days, weeks, or months. You measure it in the milliseconds it takes for OTTOGUARD.AI’s protection probe to block an illegitimate action. That’s not just attack surface reduction; it’s attack surface nullification for entire classes of threats, achieved without changing a single line of code.
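For readers who want to track this metric themselves, MTTR is simply the average elapsed time between an exposure being validated and being remediated or mitigated. The sketch below assumes you can export those two timestamps per exposure; the function name and record shape are hypothetical.

```python
from datetime import datetime, timedelta


def mean_time_to_remediate(records):
    """records: iterable of (validated_at, remediated_at) datetime pairs.
    Returns the average elapsed time as a timedelta."""
    durations = [done - found for found, done in records]
    if not durations:
        raise ValueError("no remediated exposures to average")
    return sum(durations, timedelta()) / len(durations)


# Illustrative data only: two exposures closed after 10 and 20 days.
patch_cycle = [
    (datetime(2025, 1, 1), datetime(2025, 1, 11)),
    (datetime(2025, 1, 1), datetime(2025, 1, 21)),
]
mttr = mean_time_to_remediate(patch_cycle)
```

The same calculation applies whether the closing event is a deployed patch or a runtime control taking effect; only the size of the resulting interval changes.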

This patchless, autonomous approach is particularly transformative for the areas causing the most profound and persistent pain for security leaders:

- Legacy and unpatchable systems: Organizations typically manage these with controls like network segmentation. Autonomous runtime protection offers a far more robust and granular solution, effectively shrink-wrapping these fragile but often critical systems in a protective shield, extending their life securely and bringing them into compliance.

- Zero-day and N-day attacks: CTEM focuses on known exposures, but true resilience means preparing for the unknown, too. Since OTTOGUARD.AI makes sure that applications only execute their intended and verified code, it can prevent the exploitation of vulnerabilities that the cybersecurity community hasn’t discovered yet. It stops the malicious technique, regardless of the specific flaw being targeted.

- Software supply chain security: With modern applications assembled from a stack of third-party and open-source components, understanding your true threat exposure is incredibly difficult. Autonomous mitigation allows you to enforce the integrity of your software at runtime, which prevents malicious or compromised components from deviating from their intended function, effectively locking down the workload from the inside.

Strategic AI for Operational Efficiency

The cybersecurity industry is rightly focused on the potential of artificial intelligence to handle the scale of modern threats. The conversation is buzzing with concepts related to AI-driven security and machine-speed defense.

I believe, however, that the most powerful application of AI is not merely about speed in detection and response but about strategic, practical application. This means security operations should be made savvier, not just faster.

This philosophy is central to how we designed OTTOGUARD.AI. We must be clear: Our core protection is not based on AI. The fundamental defense is a deterministic, zero-trust technology that preemptively blocks malicious code from executing, regardless of whether the threat is known or unknown. This foundation provides absolute security at the workload level.

We then apply agentic AI for a very specific and powerful purpose: to revolutionize operational efficiency and seamlessly integrate our platform into an organization’s unique security ecosystem. Our AI agents act as a sophisticated operational layer, automating the complex, manual tasks that consume your team’s valuable time.

Here is what they do in practice:

- Automate deployment and configuration: They can autonomously deploy and configure the Virsec probe into your server infrastructure, learning the environment to determine what code, processes, and software to trust without requiring human intervention.

- Intelligently integrate with your tool stack: They are designed to connect with your existing security solutions. The agents pull vulnerability data from scanners and asset information from management platforms, allowing OTTOGUARD.AI to understand your risk landscape and determine which assets can be immediately protected without patching.

- Streamline remediation workflows: For the critical exposures that our patchless mitigation can secure, the process is instant. For the few that still require a traditional patch, our agents interface with ITSM solutions to automate instructions and streamline the workflow, ensuring your security team misses nothing.
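The routing logic in the last two points can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the product’s implementation: the `Finding` shape, the technique labels, and the idea of matching findings against a set of protected assets and blockable techniques are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    RUNTIME_MITIGATION = "runtime_mitigation"  # covered by a patchless control
    PATCH_TICKET = "patch_ticket"              # still needs a traditional ITSM workflow


@dataclass(frozen=True)
class Finding:
    asset_id: str    # as reported by an asset management platform (assumed)
    vuln_id: str     # as reported by a vulnerability scanner (assumed)
    technique: str   # hypothetical label for the exploitation technique


def route_findings(findings, protected_assets, blockable_techniques):
    """Decide, per finding, whether runtime protection covers it or a patch ticket is needed."""
    plan = {}
    for f in findings:
        covered = f.asset_id in protected_assets and f.technique in blockable_techniques
        plan[(f.asset_id, f.vuln_id)] = (
            Route.RUNTIME_MITIGATION if covered else Route.PATCH_TICKET
        )
    return plan


findings = [
    Finding("pay-01", "VULN-EXAMPLE-1", "memory_corruption"),
    Finding("hr-02", "VULN-EXAMPLE-2", "misconfiguration"),
]
plan = route_findings(findings, {"pay-01"}, {"memory_corruption"})
```

In this toy run, the finding on the protected payment host is routed to runtime mitigation, while the misconfiguration on an unprotected host falls through to a patch ticket.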

Crucially, this is all achieved with humans firmly in control. The AI operates within clearly defined guardrails and requires human authorization or preapproved conditions before executing critical actions. It serves as a tactical execution engine. After a human provides the strategic directive, the AI autonomously orchestrates the optimal technical path to reach the desired outcome.
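A human-in-the-loop guardrail of this kind might look like the sketch below. The class names, the notion of a preapproved (action, target) pair, and the status strings are assumptions chosen for illustration; they are not taken from the OTTOGUARD.AI platform.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Action:
    name: str       # e.g. "deploy_probe" (hypothetical action name)
    target: str     # asset the action applies to
    critical: bool  # critical actions require authorization


@dataclass
class Guardrails:
    # (action name, target) pairs a human has approved ahead of time
    preapproved: set = field(default_factory=set)

    def authorize(self, action: Action, human_approved: bool = False) -> bool:
        if not action.critical:
            return True
        if (action.name, action.target) in self.preapproved:
            return True
        return human_approved


def execute(action: Action, guardrails: Guardrails, human_approved: bool = False) -> str:
    """Run the action only if the guardrails allow it; otherwise hold it for review."""
    if not guardrails.authorize(action, human_approved):
        return f"HELD: {action.name} on {action.target} awaits human approval"
    return f"EXECUTED: {action.name} on {action.target}"


rails = Guardrails(preapproved={("deploy_probe", "pay-01")})
held = execute(Action("enable_blocking", "pay-01", critical=True), rails)
ran = execute(Action("deploy_probe", "pay-01", critical=True), rails)
```

The design choice worth noting is that the default for a critical action is to hold, so automation never proceeds on a critical change unless a person or a preapproved condition has explicitly allowed it.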

The goal is to unburden security professionals from routine, burnout-inducing tasks, freeing them to focus on areas such as proactive adversary simulation and architectural hardening.

Building a Resilient Future: A Roadmap for Leadership

Adopting this new security approach is a strategic journey that requires more than new technology. Executive sponsorship, a commitment to cultural change, and a deliberate, phased execution are all essential parts.

As a leader, your role is to advocate this transition, framing it as a fundamental investment in business resilience and operational continuity. The path forward should be a pragmatic one, building momentum and demonstrating value at each step.

- Phase 1: Foundation and quick wins (months 0-6): Begin with a narrow but critical scope, such as your external-facing applications or a single high-value business system. Use this phase to establish foundational discovery and prioritization, but immediately apply autonomous mitigation to this core set of assets. The objective is to achieve quick wins by demonstrating a quantifiable reduction in exploitable risk on your crown jewels, showcasing a near-zero MTTR for critical threats, and building credibility for the program.

- Phase 2: Expansion and integration (months 6-18): Broaden the program’s scope to include additional business units and critical legacy systems. In this phase, mature your validation processes, using BAS tools to prove the efficacy of your autonomous controls continuously. Deepen integrations, feeding the intelligence from your now-protected environment back into your overarching exposure management platform to enrich its data and refine its prioritization models.

- Phase 3: Optimization and autonomy (months 18+): At this stage, the program should be a well-oiled, continuous loop, but one where the mobilization phase for critical workloads is fully automated. The focus shifts to optimizing this new, highly efficient model. You can now use the human expertise you’ve freed from the patching treadmill to focus on different initiatives: maturing your threat hunting, refining your security architecture, and providing data-rich risk dashboards directly to business leaders. Your security budget is no longer justified by fear, but by a clear, evidence-based case for strategic investment in enterprise resilience.

Staying one step ahead is no longer a function of having the fastest human responders. It is the result of building a proactive, predictive, and adaptive security program that is deeply and seamlessly integrated with the business it protects. The shift to a continuous threat exposure management mindset is the right one. It moves us from chasing individual vulnerabilities to dismantling strategic attack paths. However, we must not stop there; we must aggressively challenge the ingrained assumption that remediation must always be a slow, painful, manual process.

The ability to win the race lies in combining the intelligence of an exposure management framework with the speed, precision, and decisiveness of autonomous threat exposure mitigation. By doing so, we can finally break free from the reactive cycle and build a security posture that is not just continuous, but truly, demonstrably, and permanently resilient.


Frequently Asked Questions

How does continuous exposure mitigation differ from traditional vulnerability management?

Continuous exposure mitigation is an evolution of traditional vulnerability management (VM). VM focuses on discovering and patching known software flaws (CVEs), often prioritized by scores like CVSS.

Where continuous exposure mitigation excels is in taking a broader approach. It is a strategic program that allows you to continuously identify, prioritize, validate, and mitigate the full spectrum of security exposures — including misconfigurations, identity flaws, and exploitable attack paths — that pose a genuine risk to business-critical assets.

The key difference is the change in perspective: from an inefficient technical exercise of fixing every bug to a business-aligned strategy of fixing what matters most, achieved by focusing on how an adversary would compromise critical operations.

How does CTEM support security frameworks and compliance?

By aligning with established frameworks like those from NIST (the National Institute of Standards and Technology), CTEM provides a practical methodology for implementing core security principles.

For instance, the CTEM lifecycle maps onto and supports the steps of the NIST Risk Management Framework (RMF), from the “Prepare” step (supported by scoping) to the “Monitor” step (which is the essence of a continuous program). The validation stage, using tools like breach and attack simulation (BAS), provides an ongoing, evidence-based assessment of control effectiveness that goes well beyond traditional periodic audits.

All this gives organizations a living, real-time dashboard of their security and compliance posture, making it significantly easier to demonstrate due diligence to auditors, regulators, and partners.

Can continuous exposure mitigation be applied to legacy systems?

Yes, and this is one of its most critical applications. Legacy systems may be unpatchable or lack modern APIs for integration. A robust continuous exposure mitigation strategy addresses these problems head-on.

CTEM can serve as a basis for developing a long-term roadmap to phase out these systems while managing them with compensating controls in the interim. For instance, autonomous workload patchless mitigation can provide a virtual shield around legacy software, preventing the exploitation of vulnerabilities at runtime without any code changes. This way, it extends the operational life of critical legacy software, bringing it under the umbrella of a modern security strategy.