Agentic AI security wasn’t just one of the many topics at RSAC 2025. It was a fundamental theme that cut across keynotes, technical sessions, and the expo floor. Is there better proof of the growing and critical importance of this topic for the future of cybersecurity?

However, not everyone sees agentic AI as an unquestionable driving force forward in cybersecurity. Calling it polarizing would be too strong, but it’s definitely a subject that raises many questions and sparks spirited but meaningful discussions. A case in point: Recent reports have revealed that 98% of organizations plan to expand their use of AI agents within the next year, yet 96% also recognize them as a growing security risk.

Reality is always much more nuanced and complex, but, in general, there are two opposing views about agentic AI in cybersecurity:

- Optimistic: Agentic AI is a groundbreaking improvement that offers autonomous capabilities to improve security operations and decision-making in cybersecurity.

- Skeptical: Agentic AI’s autonomy widens the attack surface and introduces novel complexities, adding to existing security problems.

I strongly endorse the first view, and I’ll clarify why and how agentic AI security is a force forward in the context of exposure management. But first, let’s see what makes AI agentic.

What Makes AI Agentic?

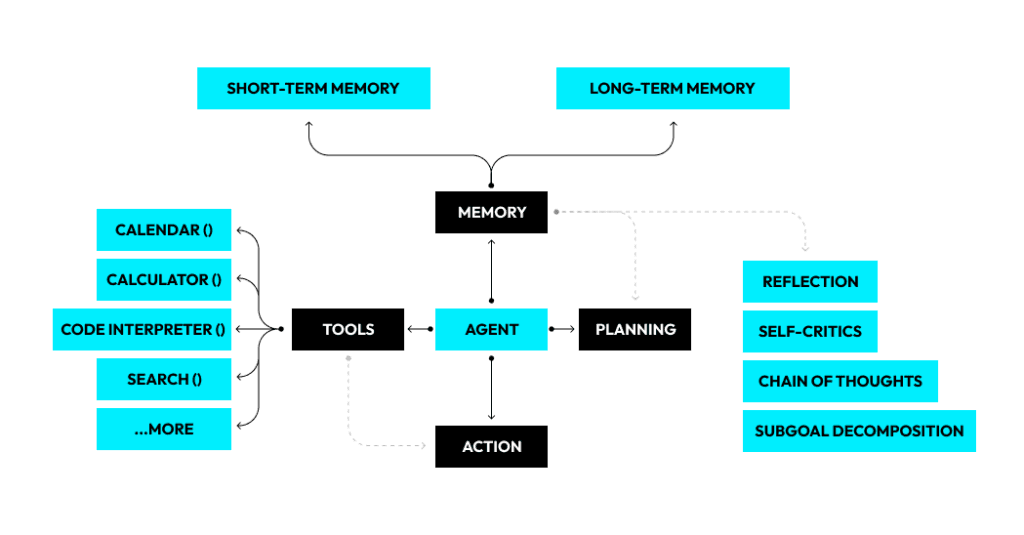

You can call artificial intelligence systems agentic if they can:

- Act autonomously: Initiate actions and pursue objectives with minimal human oversight.

- Reason: Interpret tasks, select solutions, and make decisions based on contextual clues.

- Adapt plans: Modify plans and break down complex tasks into manageable steps in response to changing conditions.

- Execute and chain actions: Deliver solutions through direct action, using integration with external tools and APIs, and sequence multiple actions in response to a single request.

- Learn continuously: Constantly refine performance and adjust to new information through advanced learning paradigms, like supervised, unsupervised, and reinforcement learning.

- Maintain memory: Keep an internal state across interactions and workflows, enabling them to recall past actions and inform future decisions.

Together, these characteristics set agentic AI apart from other kinds of AI and from mere automation, which can be achieved with something as simple as a script. They elevate AI systems to true agency, enabling independent problem-solving and strategic adaptation for dynamic, multi-step organizational challenges.

It’s worth noting that, typically, agentic AI platforms consist of an LLM that orchestrates multiple agents. This architecture allows them to manage long-term objectives, dynamically orchestrate actions, coordinate integrations, and make decisions using real-time telemetry.

Agentic AI Security Defined Clearly

What exactly is agentic AI security?

The answer to this question is not as straightforward as many of us would like it to be. It’s important to understand right from the start that this term can refer to two related but different realities.

Security of AI Agents

The first reality is the security of AI agents or the lack thereof.

The point of publications like OWASP’s “Agentic AI – Threats and Mitigations” is precisely to draw attention to the most common and severe security risks of AI agents, regardless of whether you use them in cybersecurity, finance, or any other industry.

Definition 1: Agentic AI security is the specialized cybersecurity field dedicated to safeguarding autonomous artificial intelligence systems, distinguished by their ability to understand goals, formulate multi-step plans, make decisions, and execute actions with little to no human intervention, often by interacting with external tools and APIs.

The security of AI agents has become a separate concern, non-reducible to other cybersecurity disciplines, such as network security or API security. It addresses the unique risks and attack vectors intrinsic to AI agents’ autonomy and dynamic behavior.

To get a glimpse of what insecure AI agents look like, you can look at a few of OWASP’s intentionally vulnerable agentic AI apps. Built on frameworks like LangChain, LangGraph, AutoGen, and CrewAI, they’re deliberately developed with insecure code or misconfigurations within their core.

Security by AI Agents

The second reality is the use of AI agents in cybersecurity to improve:

- Exposure management

- Vulnerability management

- Security operations

- Threat intelligence

- Governance, risk, and compliance

- Secure software development

- Identity and access management

- Offensive security testing

- Security posture management

Definition 2: Agentic AI security is a cybersecurity approach where AI-powered agents operate autonomously, driven by predefined security goals, to proactively perform and adapt to tasks such as continuous threat monitoring, intelligent detection, automated response, and rapid mitigation, or others, often learning and refining their actions over time with little to no direct human intervention.

The benefits of applying agentic AI to cybersecurity are efficiency, effectiveness, scalability, and speed. These make it especially valuable and relevant to high-volume environments, where:

- Manual, human-only workflows cannot keep up with the volume of work.

- There’s a pronounced gap between the workload and the workforce.

The benefits of agentic AI cybersecurity, however, remain largely potential and aspirational, as agentic AI has not yet achieved its full promise in cybersecurity. Its fully realized deployment in complex enterprise security environments remains nascent, with many organizations still in the early adoption phases.

Besides, agentic AI still leans on human oversight, operating within defined guardrails and often requiring pre-approval for critical actions, thus augmenting rather than completely replacing manual workflows.

Nevertheless, those four benefits of agentic AI are what make many in the cybersecurity community excited about the future.

Here, I’ll focus on the second reality: Agentic AI as a solution, not a problem. However, I will not leave out the other side of the story: I’ll discuss some problematic aspects of AI agents as well.

A Concrete Use Case for Agentic AI in Exposure Management

Most of us start with what’s closest to us, and I’ll do the same: I’ll describe how OTTOGUARD.AI, Virsec’s zero-trust platform for workload patchless mitigation, applies agentic AI to exposure management.

Virsec’s Core Defense

OTTOGUARD.AI’s core protection mechanism remains a proprietary zero-trust, “lock down by default” technology that does not rely on AI for its fundamental defense. That means its ability to proactively prevent malicious code from executing is independent of agentic or, for that matter, any other type of AI.

OTTOGUARD.AI’s defense is about preemptively closing off all human and non-human execution paths by default. This is what allows it to stop AI-driven malware or rapid exploitation of vulnerabilities, as it doesn’t depend on identifying signatures or reacting to known attack patterns.

The Role of Agentic AI: Enhancing Operational Efficiency

Virsec moves beyond the common perception of AI in cybersecurity as merely a faster detection and response mechanism.

Instead, it employs agentic AI to enhance the autonomy, integration, and operational efficiency of its security solution within an organization’s existing cybersecurity ecosystem. Think of the AI agents it uses as a swarm of little jellyfish tentacles, each performing a specific function to automate tasks and facilitate communication.

The Key Functions of AI Agents

The AI agents:

- Automate deployment and configuration: They autonomously deploy the Virsec probe into target server infrastructures and configure it, determining what code, processes, and software to trust and what not to trust without human intervention.

- Integrate with existing security tools: They pull vulnerability data from solutions like Tenable, Qualys, Rapid7, or CrowdStrike Falcon, and asset information from Armis or Axonius. That allows OTTOGUARD.AI to understand the customer’s existing risk landscape and determine which assets it can protect immediately without patching.

- Automate remediation workflows: After determining which vulnerabilities Virsec needs to protect against without patching—up to 90% of your critical exposures—the agents can then interface with ITSM solutions like ServiceNow or JIRA. That enables them to provide automated instructions on how to fix and mitigate the remaining vulnerabilities that still require patching.
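To make the last two functions more tangible, here is a minimal Python sketch of the triage step: findings pulled from a scanner are split into those covered by patchless mitigation and those that still need a patch ticket. Everything in it is an assumption for illustration, including the `Finding` fields, the `runtime_mitigable` flag, the placeholder CVE identifiers, and the ticket layout. It is not OTTOGUARD.AI’s actual data model, nor the API of any scanner or ITSM tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    asset: str
    cve: str                 # placeholder identifier, not a real CVE
    severity: str            # e.g. "critical", "high", "medium"
    runtime_mitigable: bool  # hypothetical flag: coverable without a patch?


def triage(findings):
    """Split findings into patchless-mitigated ones and patch tickets."""
    mitigated, tickets = [], []
    for f in findings:
        if f.runtime_mitigable:
            mitigated.append(f)
        else:
            # Hypothetical ticket payload; a real ITSM integration would
            # use that tool's own schema.
            tickets.append({
                "summary": f"Patch {f.cve} on {f.asset}",
                "priority": "P1" if f.severity == "critical" else "P2",
            })
    return mitigated, tickets


# Made-up example data.
findings = [
    Finding("web-01", "CVE-EXAMPLE-1", "critical", True),
    Finding("db-01", "CVE-EXAMPLE-2", "critical", False),
    Finding("app-02", "CVE-EXAMPLE-3", "high", False),
]
mitigated, tickets = triage(findings)
print(len(mitigated), "mitigated;", len(tickets), "tickets to open")
```

The point of the split is that only the second list ever reaches a human queue; the first is handled without waiting for a patch window.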

Transformative Data Processing and Integration

This way, agentic AI eliminates the need for complex, manual integrations and predefined playbook creation often seen in SOAR solutions. It can process and make sense of security-relevant information as pure data, regardless of its source or traditional categorization.

Moreover, agentic AI excels at integrating new data sources, even those with unknown schemas. It analyzes schemas and makes data usable in minutes, effectively combining previously distinct cybersecurity functions like SIEM for data aggregation and SOAR for automation.
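As a rough illustration of what handling an unknown schema involves, the sketch below infers field names and types from raw records and maps them onto a small normalized schema. It uses a hand-written synonym table where a real agent would presumably use a model, so treat it as a simplified stand-in. `FIELD_SYNONYMS`, the field names, and the sample record are all assumptions.

```python
from collections import defaultdict

# Hypothetical synonym table: normalized field -> source names that map to it.
FIELD_SYNONYMS = {
    "asset": {"host", "hostname", "asset", "device"},
    "cve": {"cve", "cve_id", "vuln_id"},
    "severity": {"severity", "risk", "criticality"},
}


def infer_schema(records):
    """Return {field name: sorted list of Python type names seen}."""
    seen = defaultdict(set)
    for rec in records:
        for key, value in rec.items():
            seen[key].add(type(value).__name__)
    return {key: sorted(types) for key, types in seen.items()}


def build_mapping(schema):
    """Map each source field to a normalized name when a synonym matches."""
    mapping = {}
    for source_field in schema:
        for target, names in FIELD_SYNONYMS.items():
            if source_field.lower() in names:
                mapping[source_field] = target
    return mapping


def normalize(records, mapping):
    """Keep only mapped fields, renamed to the normalized schema."""
    return [{mapping[k]: v for k, v in rec.items() if k in mapping}
            for rec in records]


# Made-up record from an unfamiliar source.
raw = [{"Hostname": "web-01", "CVE_ID": "CVE-EXAMPLE-1",
        "Risk": 9.8, "scanner_run": 17}]
schema = infer_schema(raw)
mapping = build_mapping(schema)
print(normalize(raw, mapping))
```

Fields with no match, like `scanner_run` here, are simply dropped in this sketch; a production system would more likely keep them and flag them for review.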

Intuitive Natural Language Interaction

Beyond data processing, agentic AI enables natural language interaction. Users can query data and initiate actions simply by expressing a desired outcome without needing to know specific query languages (like SQL) or which underlying tools to invoke.

That moves interaction beyond complex dashboards and buttons to an intuitive, outcome-driven experience via a simple prompt or even potentially via conversation. Users ask for outcomes, like “What is my security posture compared to yesterday?” The agentic AI provides the relevant information and takes actions autonomously but within predefined guardrails.
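Behind a question like the posture comparison above, the answer can be as simple as a difference between two snapshots. The sketch below assumes a snapshot is just a count of open exposures per severity. That shape and the numbers are made up for illustration and say nothing about how any product stores posture data.

```python
def posture_delta(yesterday, today):
    """Return the per-severity change in open exposures (today minus yesterday)."""
    severities = sorted(set(yesterday) | set(today))
    return {s: today.get(s, 0) - yesterday.get(s, 0) for s in severities}


# Made-up snapshots: open exposure counts per severity.
yesterday = {"critical": 12, "high": 40}
today = {"critical": 9, "high": 43, "medium": 5}

for severity, change in posture_delta(yesterday, today).items():
    print(f"{severity}: {change:+d}")
```

The user never sees this function, only the answer. The natural language layer decides that this is the computation the question calls for.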

Different Outcomes for Different Roles

Different roles within an organization receive different outcomes. For instance:

- CISOs want to know their security posture, KPIs, and projections for board meetings.

- Security analysts need to know about open tickets, relevant information, and required actions.

- Managers want updates on new vulnerabilities and patching requirements.

The agentic AI provides all the different outcomes directly, streamlining everyone’s daily tasks. Say a security analyst wakes up, goes to work, and sees that a ticket is already opened and populated with all the necessary information, requiring only an action.

The Ultimate Goal: Unburdening Security Professionals

The ultimate goal is to streamline security operations to the degree that human professionals are freed from routine, mundane, and burnout-inducing tasks. That will allow them to focus on higher-value, strategic work as the agentic AI continuously configures, integrates, and manages operations autonomously in the background.

But Isn’t Autonomous Dangerous?

Virsec doesn’t remove human supervision. AI agents execute only after a human has provided authorization or their execution has met preapproved conditions.

For this purpose, it implements guardrails—explicit limitations and controls—that define the scope of what AI agents are allowed to do, such as read from a database but not write to it. AI agents operate under a level of supervision built into the code that dictates their permissible actions. This approach guarantees a cautious and controlled use of agentic AI.
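A guardrail of this kind can be pictured as a small policy object that every agent action passes through. The sketch below is only that, a picture: the `Guardrail` class, the action names, and the three outcomes are assumptions for illustration, not Virsec’s implementation.

```python
from dataclasses import dataclass, field


@dataclass
class Guardrail:
    # Actions the agent may run on its own.
    allowed: set
    # Actions that require a human sign-off first.
    needs_approval: set = field(default_factory=set)

    def check(self, action, approved_by=None):
        """Return "allow", "pending_approval", or "deny" for an action."""
        if action in self.allowed:
            return "allow"
        if action in self.needs_approval:
            return "allow" if approved_by else "pending_approval"
        # Anything not explicitly listed is denied by default.
        return "deny"


# Hypothetical policy: the agent may read, must ask before opening
# tickets, and can never write to the database.
policy = Guardrail(allowed={"db.read"}, needs_approval={"ticket.create"})

print(policy.check("db.read"))
print(policy.check("db.write"))
print(policy.check("ticket.create"))
print(policy.check("ticket.create", approved_by="analyst"))
```

Note the default at the end of `check`: an action that nobody thought to list is refused, which mirrors the "lock down by default" stance described earlier.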

In this case, an AI agent functions more as a tool picker than an autonomous decision-maker. It is simply not designed to make critical security decisions. Given a specific task or question and a set of available integrations, the AI agent’s role comes down to autonomously selecting the most suitable tools and solutions to achieve the most desirable outcome.

In other words, the human sets the strategic “what,” and the AI autonomously figures out the most efficient “how” by selecting the right tools and executing preapproved actions.
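Here is a toy version of that division of labor. A human request comes in, and the "agent" only decides which registered tools apply and whether each may run or must wait for a person. Keyword matching stands in for the language model, and the tool names, keywords, and `preapproved` flags are all hypothetical.

```python
import re

# Hypothetical tool registry. Keyword matching is a stand-in for the
# model that would interpret the request in a real system.
TOOLS = {
    "list_vulnerabilities": {
        "keywords": {"vulnerabilities", "vulnerability", "exposures"},
        "preapproved": True,
    },
    "open_ticket": {
        "keywords": {"ticket", "assign"},
        "preapproved": True,
    },
    "quarantine_host": {
        "keywords": {"quarantine", "isolate"},
        "preapproved": False,  # critical action: a human must decide
    },
}


def pick_tools(request):
    """Return (tool, disposition) pairs for the tools a request calls for."""
    words = set(re.findall(r"[a-z0-9-]+", request.lower()))
    plan = []
    for name, spec in TOOLS.items():
        if words & spec["keywords"]:
            plan.append((name, "run" if spec["preapproved"] else "ask_human"))
    return plan


print(pick_tools("Show new vulnerabilities and isolate web-01"))
```

The agent picks the "how" from a fixed menu. It cannot invent a tool that is not registered, and it cannot promote a critical action to run on its own.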

At its core, OTTOGUARD.AI makes deterministic decisions, where software can be either good or bad, not probably good or bad. And that’s as far from probabilistic AI decisions as you can get.
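To show what a deterministic, default-deny decision looks like in general, here is a generic allowlist check in Python. To be clear, this is not Virsec’s proprietary mechanism, which the source does not describe. It is a textbook-style illustration, and the `TRUSTED` set and sample byte strings are assumptions.

```python
import hashlib


def sha256_of(data: bytes) -> str:
    """Return the hex SHA-256 digest of some bytes."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical allowlist: digests of code that has been explicitly trusted.
TRUSTED = {sha256_of(b"known-good binary contents")}


def may_execute(data: bytes) -> bool:
    """Binary decision: trusted or not. No scores, no probabilities."""
    return sha256_of(data) in TRUSTED


print(may_execute(b"known-good binary contents"))
print(may_execute(b"anything else"))
```

The same input always produces the same answer, and anything not on the list is refused without needing to recognize it as malicious.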

In a nutshell, the ultimate choice—what to allow, what not—resides with the customer, underlining the necessity for humans in the loop even in increasingly autonomous systems.

Can You Trust Agentic AI Security?

Yes, AI hallucinations do happen, and AI agents make mistakes. However:

- How is that different from all the other software we’ve used so far?

- How is it different from what even the humans with the highest expertise do sometimes?

- Since when has infallibility become the single most important criterion for usefulness?

As the success rates of social engineering campaigns suggest, human error is one of the gravest and most unpredictable problems in cybersecurity. And the situation with the human factor in exposure management is no different.

Consider a typical day in the life of a security analyst. Overwhelmed by manual workflows, deafening waves of alerts, and mundane tasks, a security analyst may miss important details and IoCs and make an erroneous decision under stress due to alert fatigue or burnout.

Circling back to Virsec, its agentic AI is highly specialized and trained on specific cybersecurity data. That enables it to execute functions and provide accurate information with remarkable consistency and speed, surpassing human capabilities for such tasks. Honestly, that is one of the main reasons why humans started developing and training AI models in the first place.

Achieving precision is not trivial. It demands monumental ongoing effort in training models, cleaning data, and establishing robust feedback loops. And to further enhance accuracy, the system also incorporates suggested prompts or asks qualifying questions when user queries are ambiguous, interactively refining intent and precision.
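The qualifying-question behavior can be sketched in a few lines. When a query matches exactly one intent, the system acts on it. When it matches several or none, it asks instead of guessing. The intent names and keyword sets below are made up, and keyword overlap is a deliberately crude stand-in for real intent detection.

```python
import re

# Hypothetical intents and trigger words.
INTENTS = {
    "posture_summary": {"posture", "summary", "kpi"},
    "open_tickets": {"ticket", "tickets"},
    "new_vulnerabilities": {"vulnerabilities", "vulnerability", "patch"},
}


def interpret(query):
    """Return a single intent, or a clarifying question with options."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    matches = [name for name, keywords in INTENTS.items() if words & keywords]
    if len(matches) == 1:
        return {"intent": matches[0]}
    if not matches:
        return {"clarify": "Could you say more about what you need?",
                "options": list(INTENTS)}
    return {"clarify": "Which of these did you mean?", "options": matches}


print(interpret("Show my open tickets"))
print(interpret("patch tickets"))
```

Asking costs the user a few seconds; acting on the wrong reading of an ambiguous request can cost far more.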

Virsec AI agents’ narrow focus on cybersecurity tasks allows for strict control over data quality. That control is a core promise for building user trust and delivering a reliable AI that functions as a highly competent security expert minus the (alert) fatigue.

These expert security AI agents can process petabytes of data and watch events around the clock, thereby decreasing the likelihood of bad outcomes compared to human operators alone.

Besides, a machine error (a bug) is much easier to resolve than a human lapse once it has been identified and understood.

That positions AI as a more consistent and reliable decision-maker for high-volume, repetitive tasks and an accountability layer that continuously checks the accuracy of decisions, minimizing human error and fatigue.

Final Thoughts

The discussions at RSAC 2025 and the broader industry sentiment confirm that agentic AI is not an ephemeral trend but a foundational shift in cybersecurity.

While the path to fully autonomous security is nuanced and fraught with questions, the potential it offers for exposure management and beyond is too significant to ignore. The tension between its transformative potential and unique risks drives us to adopt a strategic, well-governed approach.

Virsec’s OTTOGUARD.AI was born out of this pragmatic vision, showing how agentic AI can revolutionize operational efficiency without sacrificing human control. By offloading repetitive tasks that overwhelm human analysts, agentic AI improves exactness, streamlines cybersecurity processes, and elevates the human role to strategic oversight and complex problem-solving.

Agentic AI is the future of cybersecurity. Its role, however, is not to replace human expertise but to augment it with intelligent, autonomous systems that can operate at a scale and consistency impossible for humans alone.

The debate must move beyond whether AI makes mistakes—all software and humans do—to how AI’s predictable errors can be systematically managed and improved, offering a more reliable alternative to the unpredictable nature of human fatigue and oversight.

Agentic AI offers a new frontier for exposure management: A brilliant opportunity to maintain continuous vigilance over data and systems, proactively decrease the likelihood of adverse security outcomes, and forge a new level of trust in cybersecurity autonomy.

Discover OTTOGUARD.AI: Optimize your exposure management through powerful patchless mitigation and agentic AI capabilities.

FAQs

Agentic AI differentiates itself by its ability to autonomously understand goals, formulate multi-step plans, adapt, execute chained actions with external tools, learn continuously, and maintain memory, far exceeding basic scripting or single-task AI.

Trust in agentic AI comes from its high specialization in cybersecurity data, which enables remarkable consistency and speed, and from the fact that its identifiable machine errors can be systematically resolved.

Agentic AI augments human security professionals by automating high-volume, mundane tasks, but it operates within defined guardrails and requires human authorization or preapproved conditions for critical actions, thus maintaining human oversight and strategic control.