The operational friction between vulnerability disclosure and successful remediation is a permanent and quantifiable source of enterprise risk.

Current vulnerability management programs are a necessary component of cyber hygiene. However, they too often prove structurally incapable of keeping pace with the velocity of exploit weaponization and modern software development. The institutional reliance on the standard cycle of scanning, prioritizing, and patching creates a persistent window of exposure that adversaries consistently exploit.

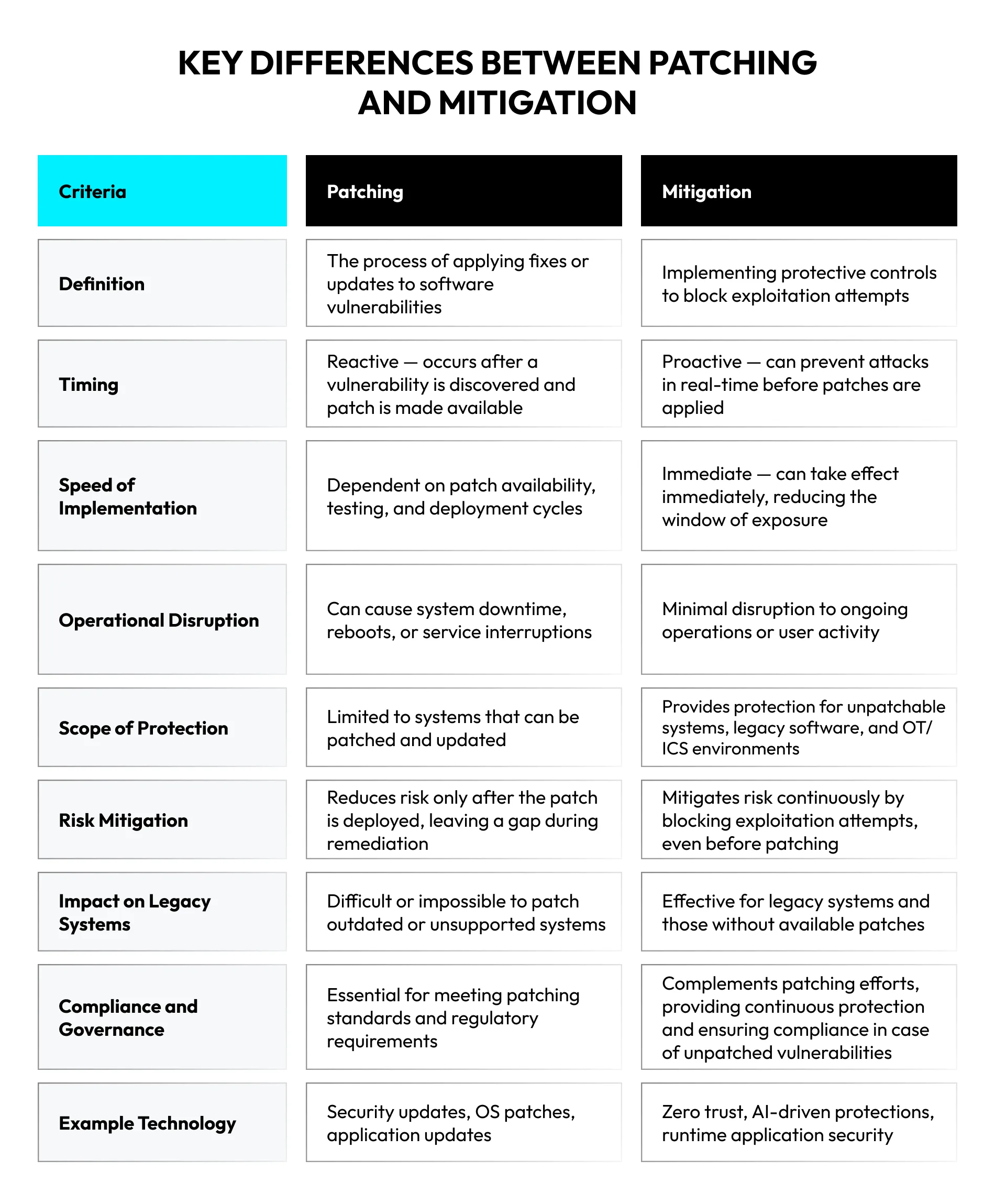

This state of affairs demands near-real-time exploit prevention in the form of mitigation. Effective vulnerability mitigation strategies must function as a durable, compensatory control that neutralizes threats at the point of attack and provides stable protection independent of patching cycles.

Vulnerability mitigation should not be seen as an alternative to, or a replacement for, diligent patch management. Instead, it is a critical architectural component that buys back time, reduces operational burden, and, most importantly, secures assets that are otherwise indefensible.

The following analysis outlines an architectural approach for such a strategy, one founded on the principles of zero trust, applied artificial intelligence, and autonomous protection.

The Limits of Patch-Centric Security

The mandate to patch every critical CVE is an operational fiction. For any large enterprise, this practice is untenable due to a few systemic, interwoven constraints.

The Remediation Debt and the Patch Gap

The number of disclosed vulnerabilities vastly exceeds the operational capacity of security and IT teams. This disparity creates a constantly growing backlog of unpatched systems — a form of technical debt that directly translates to security risk.

Prioritization by CVSS score or by known exploited vulnerabilities (KEV) catalogs is a necessary triage measure, but it is not a strategy. It accepts a considerable degree of risk for every vulnerability not yet at the top of the list, and it cedes the initiative to the attacker, who effectively dictates the priority list. This patch gap, the time between a vulnerability’s disclosure and its remediation, is a period of maximum risk.
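To make that trade-off concrete, here is a minimal Python sketch of KEV-first, CVSS-second triage with a patch gap counter. The `Finding` record, the `triage` and `patch_gap_days` helpers, the placeholder identifiers such as `CVE-A`, and the dates are all hypothetical; the sketch assumes the scanner already supplies a score, a KEV flag, and a disclosure date for each finding.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class Finding:
    """Hypothetical scanner finding; field names are illustrative only."""
    cve_id: str                        # placeholder identifier, e.g. "CVE-A"
    cvss: float                        # CVSS base score, 0.0 to 10.0
    in_kev: bool                       # listed in a known-exploited catalog?
    disclosed: date                    # public disclosure date
    remediated: Optional[date] = None  # None while still unpatched


def triage(findings: List[Finding]) -> List[Finding]:
    """Order open findings: KEV-listed first, then by descending CVSS."""
    open_items = [f for f in findings if f.remediated is None]
    # False sorts before True, so `not f.in_kev` puts KEV entries first.
    return sorted(open_items, key=lambda f: (not f.in_kev, -f.cvss))


def patch_gap_days(f: Finding, today: date) -> int:
    """Days from disclosure to remediation, or to `today` if still open."""
    end = f.remediated if f.remediated is not None else today
    return (end - f.disclosed).days


if __name__ == "__main__":
    today = date(2024, 6, 1)
    backlog = [
        Finding("CVE-A", 9.8, False, date(2024, 5, 1)),
        Finding("CVE-B", 7.5, True, date(2024, 4, 1)),
        Finding("CVE-C", 5.3, False, date(2024, 1, 15)),
        Finding("CVE-D", 8.1, False, date(2024, 3, 1), remediated=date(2024, 3, 20)),
    ]
    for f in triage(backlog):
        print(f.cve_id, patch_gap_days(f, today))
```

With the sample backlog, the queue comes out as CVE-B (61 days open), CVE-A (31), then CVE-C (138): the lowest-scored item ages the longest, which is the risk that ranking alone accepts.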

Accelerated Exploit Weaponization

The time from vulnerability disclosure to the availability of a functional exploit has collapsed. Threat actors now rapidly productize sophisticated exploits that were once the domain of nation-states and integrate them into ransomware-as-a-service (RaaS) platforms.

This exploit “trickle-down” democratizes advanced attack capabilities, making every organization a potential target for threats that were considered rare in the past. A patch-centric model cannot operate at this speed.

Operational Disruption and Patch Validation

The patching process is inherently disruptive. It frequently requires application downtime and system reboots, creating direct conflict with business continuity and uptime requirements.

For mission-critical systems in finance, manufacturing, or healthcare, the cost of this disruption can even outweigh the perceived risk of a given vulnerability, leading to the indefinite deferment of patches.

Moreover, patches can themselves introduce new risks, such as breaking application functionality or creating unforeseen dependencies. The resulting need to test patches extensively before deployment further extends the patch gap and adds to the operational burden on IT teams.

Asset Incompatibility

A considerable portion of enterprise assets cannot be reliably or promptly patched. This includes industrial control systems (ICS), operational technology (OT), and embedded devices with long service lives and infrequent maintenance windows.

Additionally, legacy vulnerabilities in end-of-life (EOL) software and operating systems persist with no vendor patches available, leaving them as permanent, unfixable fixtures of the attack surface.

These factors demonstrate that a strategy centered on remediation alone is fundamentally flawed. It practically guarantees a state of constant vulnerability and operational friction.

Mitigation as a Primary Control

A more resilient security architecture, suited to today’s threat landscape, treats vulnerability mitigation as an essential control rather than a temporary stopgap. This perspective regards mitigation as a form of remediation, as argued in this vulnerability mitigation vs. remediation discussion.

This perspective aligns with established frameworks such as the “Protect” function of the NIST Cybersecurity Framework, whose Protective Technology category (PR.PT) calls for technical security solutions to be managed to ensure the security and resilience of systems and assets.

An effective vulnerability mitigation process must be implemented directly at the point of risk: the workload itself. Its primary function is to block the techniques that make exploitation possible, such as arbitrary code execution, memory corruption, or unauthorized process injection.

This approach diverges from the perimeter-focused philosophy of network-level controls such as web application firewalls (WAFs), which lack runtime context, a weakness adversaries often exploit with sophisticated evasion techniques.

For example, adversaries bypass WAFs by using obfuscation techniques, such as multi-layer encoding, to disguise malicious commands. Lacking runtime context, a WAF inspects only the encoded payload as it arrives, before the application has processed it, and is often blind to novel evasion methods that don’t match its known patterns.

In contrast, a runtime protection tool operates within the application and observes the command after the server has decoded it into its true, malicious form. The WAF’s blindness to this internal decoding is what makes the bypass possible, whereas a runtime tool sees, and can block, the actual executable threat.
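The difference in visibility can be illustrated with a simplified Python sketch. It assumes a two-layer encoding (Base64, then percent-encoding) and a hypothetical signature list; `app_decode` merely simulates the application’s own decoding step. A real runtime protection tool would observe the operation at the point of execution inside the application rather than re-implementing the decoder, so treat this as an illustration of why the two vantage points differ, not as a design for either control.

```python
import base64
import re
from urllib.parse import quote, unquote

# Hypothetical signatures of the kind a pattern-matching filter might carry.
SIGNATURES = [
    re.compile(r"\bcat\s+/etc/passwd\b"),
    re.compile(r";\s*rm\s+-rf\b"),
]


def matches_signature(text: str) -> bool:
    """True if any known-bad pattern appears in the text."""
    return any(sig.search(text) for sig in SIGNATURES)


def app_decode(raw: str) -> str:
    """Stand-in for the application's own decoding: URL-decode, then Base64."""
    return base64.b64decode(unquote(raw)).decode("utf-8")


def perimeter_inspect(raw: str) -> bool:
    """Perimeter view: only the raw, still-encoded parameter is visible."""
    return matches_signature(raw)


def runtime_inspect(raw: str) -> bool:
    """In-application view: the value after the application has decoded it."""
    return matches_signature(app_decode(raw))


def encode_two_layers(command: str) -> str:
    """Attacker side: Base64-encode, then percent-encode the result."""
    b64 = base64.b64encode(command.encode("utf-8")).decode("ascii")
    return quote(b64, safe="")


if __name__ == "__main__":
    payload = encode_two_layers("cat /etc/passwd")
    print("perimeter sees:", payload, "->", perimeter_inspect(payload))
    print("runtime sees:", app_decode(payload), "->", runtime_inspect(payload))
```

Run as a script, the perimeter check reports `False` for the encoded payload, while the runtime check reports `True` for the same request.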

By decoupling the act of protection from the act of patching, this workload-centric approach provides immediate risk reduction for newly disclosed vulnerabilities and offers a permanent security solution for unpatchable systems.

Implementing the Full Scope of Zero Trust

The benefits of a zero-trust approach are compelling. However, the fundamental principle of zero trust, “never trust, always verify,” is often applied only to network access and identity, as in zero trust network access (ZTNA). That is insufficient.

Beyond the Authenticated Session

ZTNA authenticates access to an application, but once trust is granted for a session, it has no visibility into or control over what happens inside the application’s execution space. This gap is a critical blind spot that attackers exploit for lateral movement, privilege escalation, and in-memory attacks.

A complete zero-trust strategy must extend to the execution level, i.e., the application runtime itself. A deterministic control model is a prime example of zero-trust implementation at this level.

The Principles of Deterministic Zero Trust

Many security tools operate on a probabilistic model; they hunt for anomalous behaviors or signatures of known-bad activity. These methods are inherently prone to false positives and can be bypassed by novel attack vectors. A more effective zero-trust architecture employs a deterministic protection model based on an intimate understanding of the application’s correct behavior.

It does so by mapping the application’s legitimate structure and execution paths: its code, libraries, processes, memory usage, and permissible sequences of operations. This map constitutes the “known-good” operational baseline.

At runtime, a lightweight agent enforces this baseline. Any deviation from it is a direct violation of the deterministic model, and the agent blocks the offending action before it can execute.

Because it enforces a granular map of legitimate application behavior, this model inherently applies a default-deny posture to all unauthorized actions within the workload.
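A deterministic check is an exact lookup against the known-good map rather than a score compared with a threshold. The minimal Python sketch below assumes a hypothetical `Operation` schema of (process, action, target) and a baseline that has already been learned; a production baseline would also cover permissible sequences of operations and memory behavior, which this sketch omits.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Operation:
    """One observed action inside the workload (hypothetical schema)."""
    process: str  # acting process, e.g. "nginx"
    action: str   # e.g. "read_file", "connect", "spawn"
    target: str   # object of the action: a path, an address, an executable


class Baseline:
    """Known-good map: the exact set of operations the workload may perform."""

    def __init__(self, allowed: Iterable[Operation]) -> None:
        self._allowed = frozenset(allowed)

    def permits(self, op: Operation) -> bool:
        # Exact membership test: no scoring, no thresholds, no heuristics.
        return op in self._allowed


def enforce(baseline: Baseline, op: Operation) -> str:
    """Default deny: anything not explicitly in the baseline is blocked."""
    return "allow" if baseline.permits(op) else "block"


if __name__ == "__main__":
    baseline = Baseline([
        Operation("nginx", "read_file", "/var/www/index.html"),
        Operation("nginx", "connect", "app-backend:8080"),
    ])
    print(enforce(baseline, Operation("nginx", "read_file", "/var/www/index.html")))
    print(enforce(baseline, Operation("nginx", "spawn", "/bin/sh")))
```

Nothing in `enforce` lists bad behavior: the spawn of `/bin/sh` is blocked only because it was never part of the baseline, which is the default-deny property described above.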

This control model does not trust that a process is benign simply because an authenticated user initiated it. Instead, it verifies that every operation aligns with the established, approved execution flow. This prevents attackers from co-opting legitimate software components to carry out their objectives, effectively containing the blast radius of any intrusion.

Consider the Log4Shell vulnerability in Log4j (CVE-2021-44228).

A deterministic control would not merely block the outbound LDAP request. It would prevent the more fundamental violation: the Java process loading an unauthorized remote class or attempting to spawn a shell. Neither action is part of the application’s established behavior map, so a deterministic zero-trust solution blocks it immediately, neutralizing the exploit at its root regardless of the specific payload or evasion technique used.
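Applied to this scenario, the decision can be sketched in Python as a check on the runtime action itself. The `RuntimeEvent` schema, the allowed class origin `file:/opt/app/lib/`, the `attacker.example` host, and the assumption that the service never spawns child processes are all hypothetical; how an agent actually intercepts class loading or process creation inside a JVM is out of scope here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RuntimeEvent:
    """An action observed inside the JVM (hypothetical schema)."""
    process: str  # e.g. "java"
    kind: str     # "class_load" or "process_spawn"
    detail: str   # class origin URL, or path of the executable to spawn


# Hypothetical known-good map for a Java web service.
ALLOWED_CLASS_ORIGINS = ("file:/opt/app/lib/",)   # local application jars only
ALLOWED_CHILD_PROCESSES: frozenset = frozenset()  # this service never spawns


def is_violation(ev: RuntimeEvent) -> bool:
    """Decide from the observed action alone; the request payload is never an input."""
    if ev.kind == "class_load":
        return not ev.detail.startswith(ALLOWED_CLASS_ORIGINS)
    if ev.kind == "process_spawn":
        return ev.detail not in ALLOWED_CHILD_PROCESSES
    return True  # unknown event kinds are denied by default


if __name__ == "__main__":
    # Differently obfuscated lookup strings, e.g. "${jndi:ldap://...}" and
    # "${${lower:j}ndi:ldap://...}", end in the same runtime actions below,
    # so the verdict is identical no matter which string was sent.
    remote_load = RuntimeEvent("java", "class_load", "http://attacker.example/Exploit.class")
    shell = RuntimeEvent("java", "process_spawn", "/bin/sh")
    local_load = RuntimeEvent("java", "class_load", "file:/opt/app/lib/app.jar")
    for ev in (remote_load, shell, local_load):
        print(ev.kind, ev.detail, "->", "block" if is_violation(ev) else "allow")
```

Because the request string is never an input to `is_violation`, an obfuscated lookup that reaches the same class load or shell spawn receives the same verdict as an unobfuscated one.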

The Role of Agentic AI in Mitigation

The application of AI in vulnerability mitigation is maturing. Early models concentrated on using machine learning to spot anomalies in large datasets, an approach that still relies on analyzing past threats.

The current trend is toward a more advanced application of AI that improves the efficiency and integration of the entire security ecosystem. This is achieved through agentic AI, a class of AI that can autonomously pursue objectives, adapt plans, and orchestrate complex tasks.

In vulnerability mitigation, agentic AI is used to advance two key areas: vulnerability management and security operations.

Advanced Vulnerability Management

Agentic AI transforms vulnerability management from a set of largely manual workflows and siloed processes into an integrated whole.

It acts as an intelligent hub that connects with an organization’s scanners and asset management platforms to create a unified, contextual view of risk. It then interfaces with IT service management (ITSM) platforms to automate remediation workflows, from ticketing to resolution.
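The data-integration step of such a hub can be sketched in Python as a join between scanner output and the asset inventory, followed by ticket creation above a contextual risk threshold. Everything here is hypothetical: the record types, the 1.5 and 1.2 context weights, the threshold of 10, and the assumption that the resulting `Ticket` objects are later submitted to an ITSM platform by code not shown. The sketch shows only the deterministic plumbing an agent would orchestrate, not the agent’s planning.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ScanFinding:
    """Hypothetical normalized scanner output."""
    asset_id: str
    cve_id: str   # placeholder identifier, e.g. "CVE-A"
    cvss: float


@dataclass
class Asset:
    """Hypothetical asset-inventory record."""
    asset_id: str
    owner: str
    internet_facing: bool
    business_critical: bool


@dataclass
class Ticket:
    """Payload that would be handed to an ITSM platform (submission not shown)."""
    assignee: str
    summary: str
    risk: float


# Hypothetical context weights; a real program would tune or replace these.
EXPOSURE_WEIGHT = 1.5
CRITICALITY_WEIGHT = 1.2


def contextual_risk(f: ScanFinding, a: Asset) -> float:
    """Scale the raw CVSS score by the asset's exposure and criticality."""
    score = f.cvss
    if a.internet_facing:
        score *= EXPOSURE_WEIGHT
    if a.business_critical:
        score *= CRITICALITY_WEIGHT
    return round(score, 2)


def build_tickets(findings: List[ScanFinding], assets: List[Asset],
                  threshold: float = 10.0) -> List[Ticket]:
    """Join findings with inventory context; ticket those at or above threshold."""
    inventory = {a.asset_id: a for a in assets}
    tickets: List[Ticket] = []
    for f in findings:
        asset = inventory.get(f.asset_id)
        if asset is None:
            # No context to score with: route for inventory reconciliation.
            tickets.append(Ticket("unassigned", f"{f.cve_id} on unknown asset {f.asset_id}", f.cvss))
            continue
        risk = contextual_risk(f, asset)
        if risk >= threshold:
            tickets.append(Ticket(asset.owner, f"{f.cve_id} on {asset.asset_id}", risk))
    # Highest contextual risk first.
    return sorted(tickets, key=lambda t: t.risk, reverse=True)
```

A CVSS 7.5 finding on an internet-facing, business-critical asset rises to 13.5 and is ticketed, while a 9.0 finding on an isolated, non-critical host stays below the threshold; findings on assets missing from the inventory are routed for reconciliation rather than scored blindly.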

SOC Augmentation

The primary benefit of this approach is augmenting the Security Operations Center (SOC). Agentic AI functions as a force multiplier by handling the high-volume, repetitive tasks that cause analyst burnout.

Operating within strict, human-defined guardrails, an agent can be tasked with a strategic goal, and it will autonomously determine the most efficient way to achieve it. This frees human experts to focus on high-level analysis and complex threat resolution.

Autonomy Within Boundaries

Granting artificial intelligence autonomy in a sensitive field like security is understandably a source of concern. However, a properly implemented system avoids giving AI unrestricted power. The approach is not about total AI control, but about giving AI agents the freedom to work independently within a set of firm and clear boundaries.

These boundaries are operational rules that dictate the agent’s permissions. For example, a rule might allow an agent to query a log database for signs of an attack but strictly prohibit it from deleting or changing any of that data. Any action that is deemed critical — like isolating a server from the network — would still require explicit approval from a human operator or have to meet a strict set of pre-authorized conditions.
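Such boundaries can be expressed as an explicit policy check that runs before any agent action executes. In this minimal Python sketch, the action names, the three-way `Decision`, and the `preauthorized_conditions_met` flag (standing in for whatever pre-authorized conditions an organization defines) are hypothetical.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    DENY = "deny"
    NEEDS_APPROVAL = "needs_approval"


# Hypothetical guardrail policy for a log-investigation agent.
READ_ONLY_ACTIONS = frozenset({"query_logs", "read_alert"})
PROHIBITED_ACTIONS = frozenset({"delete_logs", "modify_logs"})
CRITICAL_ACTIONS = frozenset({"isolate_host"})


def authorize(action: str, *, human_approved: bool = False,
              preauthorized_conditions_met: bool = False) -> Decision:
    """Check a proposed agent action against the guardrails before it runs."""
    if action in PROHIBITED_ACTIONS:
        return Decision.DENY  # no approval can unlock these
    if action in READ_ONLY_ACTIONS:
        return Decision.ALLOW
    if action in CRITICAL_ACTIONS:
        if human_approved or preauthorized_conditions_met:
            return Decision.ALLOW
        return Decision.NEEDS_APPROVAL  # escalate to a human operator
    return Decision.DENY  # anything not explicitly listed is denied


if __name__ == "__main__":
    for action in ("query_logs", "delete_logs", "isolate_host", "reboot_host"):
        print(action, authorize(action).value)
```

Prohibited actions stay denied even with human approval, and any action the policy does not name is denied as well, so extending the agent’s toolkit requires a deliberate policy change.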

This creates a partnership in which the security team sets the high-level strategy and goals and the AI agent determines the best tactics to achieve them. Rather than making critical strategic judgments, the AI acts as an intelligent assistant, choosing the right pre-approved method from its toolkit to execute a task efficiently.

In this model, the human remains the essential pilot, guiding the overall strategy and making the critical judgments that require experience and intuition. The AI, in turn, acts as the tireless co-pilot, handling the demanding operational details at machine speed and freeing up the human expert to focus on what matters most.

Closing the Window of Exposure: The Case for Autonomous Mitigation

Relying on a patch-dependent security strategy is no longer a sustainable option. A more effective security architecture integrates three core components:

- Direct, workload-centric mitigation to block exploits

- A deterministic zero-trust model to control application behavior

- Agentic AI to automate and orchestrate security operations

Together, these elements create a security posture that actively protects systems regardless of their patch status, gives control back to the security team, and closes the gap between vulnerability and exploit.

Book a demo with OTTOGUARD.AI to see how workload patchless mitigation strengthens your vulnerability mitigation strategy and reduces enterprise risk.

FAQs

Can vulnerability mitigation protect systems that cannot be patched?

Yes. That is a primary and critical function of advanced vulnerability mitigation strategies. Because they focus on preventing the act of exploitation rather than modifying the vulnerable code, they are an effective and often permanent compensatory control for systems where patches are unavailable or impractical to apply. These include:

- Legacy software

- EOL operating systems

- Specialized OT/ICS environments where uptime is paramount.

How can the effectiveness of a vulnerability mitigation strategy be measured?

Through a combination of risk reduction, operational efficiency, and financial impact metrics, such as:

- A reduction in the number of critical vulnerabilities requiring emergency, out-of-band patching.

- The mean time to protect (MTTP) for new zero-day vulnerabilities.

- A decrease in operational downtime associated with both security incidents and unplanned patching.

- The number of security incidents related to the exploitation of known but unpatched vulnerabilities.

- Evidence of compliance with frameworks like NIST CSF.
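MTTP has no single standardized definition. Assuming it is measured from public disclosure to the moment a protective control is in place on the affected assets, a minimal Python sketch of the calculation might look like this; the record format is hypothetical.

```python
from datetime import datetime
from statistics import mean
from typing import List, Optional, Tuple

# Hypothetical record: (disclosed_at, protected_at); protected_at is None if still exposed.
Record = Tuple[datetime, Optional[datetime]]


def mean_time_to_protect(records: List[Record]) -> Tuple[Optional[float], int]:
    """Return (mean hours from disclosure to protection, count still unprotected).

    Unprotected items are reported separately rather than silently dropped,
    since excluding them would make the mean look better than reality.
    """
    hours = [(p - d).total_seconds() / 3600 for d, p in records if p is not None]
    still_open = sum(1 for _, p in records if p is None)
    return (mean(hours) if hours else None, still_open)


if __name__ == "__main__":
    disclosed = datetime(2024, 6, 1, 8, 0)
    records = [
        (disclosed, datetime(2024, 6, 1, 12, 0)),  # protected after 4 hours
        (disclosed, datetime(2024, 6, 2, 4, 0)),   # protected after 20 hours
        (disclosed, None),                         # not yet protected
    ]
    print(mean_time_to_protect(records))
```

For the sample records, two vulnerabilities protected 4 and 20 hours after disclosure plus one still unprotected yield a mean of 12.0 hours with one open item.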

How do vulnerability mitigation strategies fit into exposure management?

They act as the “protect-now” layer within exposure management, closing risk as soon as it’s found. This aligns with a mitigation-first approach, in which runtime controls block exploits immediately, reduce mean time to protect, and secure even unpatchable OT/ICS and legacy systems.